The Pillars of Trustworthy AI

with Case Studies included!

Welcome to the 126 new members this week! This newsletter now has 44,856 subscribers.

As businesses increasingly rely on AI to drive decision-making, the technology must be trustworthy. But what exactly does that mean? Here, we'll explore the core pillars of trustworthy AI: explainability, fairness, and security.

Today, I’ll cover:

Pillar #1: Explainability

Pillar #2: Fairness

Pillar #3: Security

5 Questions to Ask Before You Trust an AI Model

Tooling to manage responsible AI

Let’s Dive In! 🤿

Pillar #1: Explainability

For AI to be trustworthy, it must be explainable. Users should understand how the system arrived at a particular decision. Suppose, for example, that a company uses AI to decide which customers to offer credit to. Data scientists should then be able to trace the system's path from input (customer information) to output (decision). Otherwise, there's no way to know whether the AI behaves as it should, or whether bias has crept into its decision-making.
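To make "tracing the path from input to output" concrete, here is a minimal sketch of an explainable credit decision. It assumes a toy linear scoring model; the feature names, weights, bias, and threshold are all hypothetical and only there for illustration, not taken from any real lender or library.

```python
# Hypothetical weights for a toy linear credit score (illustration only).
WEIGHTS = {"income_k": 0.04, "years_employed": 0.3, "missed_payments": -1.2}
BIAS = -2.0       # hypothetical baseline score
THRESHOLD = 0.0   # hypothetical cut-off: approve when score >= THRESHOLD


def explain_decision(applicant):
    """Return the decision, the score, and each feature's contribution to it."""
    contributions = {name: WEIGHTS[name] * applicant[name] for name in WEIGHTS}
    score = BIAS + sum(contributions.values())
    decision = "approve" if score >= THRESHOLD else "decline"
    return decision, score, contributions


applicant = {"income_k": 55, "years_employed": 4, "missed_payments": 2}
decision, score, contributions = explain_decision(applicant)

print(decision, round(score, 2))
# List the features from most to least influential on this decision.
for name, value in sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True):
    print(f"{name}: {value:+.2f}")
```

Because every contribution is visible, a reviewer can see which input pushed the score over or under the line. Real models are rarely this simple, but the goal is the same: a decision you can break down and inspect.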

Pillar #2: Fairness

Fairness is closely related to explainability. To ensure that an AI system behaves fairly, data scientists must understand how it makes decisions. If they can't track the path from input to output, they can't tell whether the system discriminates against certain groups of people.
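One simple way to check whether a system treats groups differently is to compare approval rates across them. The sketch below is a minimal illustration on made-up data: the group labels, the records, and the helper names are all hypothetical. A commonly cited rule of thumb treats a ratio below 0.8 as a signal to investigate further, not as proof of discrimination.

```python
from collections import defaultdict


def approval_rates(records):
    """Compute the share of positive decisions per group.

    `records` is a list of (group, decision) pairs, where decision is 1 or 0.
    """
    totals = defaultdict(int)
    approved = defaultdict(int)
    for group, decision in records:
        totals[group] += 1
        approved[group] += decision
    return {group: approved[group] / totals[group] for group in totals}


def disparate_impact_ratio(rates):
    """Ratio of the lowest group approval rate to the highest."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody was approved, so no group is favored
    return min(rates.values()) / highest


# Made-up decisions for two hypothetical groups, A and B.
records = [
    ("A", 1), ("A", 1), ("A", 1), ("A", 0),
    ("B", 1), ("B", 0), ("B", 0), ("B", 0),
]
rates = approval_rates(records)
ratio = disparate_impact_ratio(rates)
print(rates, round(ratio, 2))
```

This is only one of several fairness measures, and a single number never tells the whole story, but it gives data scientists a starting point for the kind of tracking described above.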

Pillar #3: Security

AI systems are only as secure as the data they're trained on. If that data is tampered with, the resulting model can be compromised; if it is leaked, the people described in it are exposed. Remember that training data often contains sensitive information about people, which could be used for identity theft or other malicious purposes. For this reason, companies must take steps to secure their data, and their AI systems, against potential threats.

5 Questions to Ask Before You Trust an AI Model

With all the talk about artificial intelligence (AI), it's no wonder business professionals are eager to implement AI solutions in their organizations. After all, AI has the potential to revolutionize nearly every industry. But before you jump on the AI bandwagon, it's important to ask some tough questions about the AI models you're considering. Here are 5 questions to ask before you trust an AI model:

How was the AI model developed?

Understanding how an AI model was developed is important before you trust it. Did a reputable source develop the model? Was it developed using sound statistical methods? If you're not sure, consult with someone knowledgeable about AI development.

What data was used to train the AI model?

The data used to train an AI model is just as important as the methods used to develop it. After all, garbage in, garbage out. Be sure to ask about the quality of the data used to train the model. Is it representative of the real-world data that will be fed into the model once it is deployed?

How well does the AI model generalize to new data?

Even if an AI model performs well on training data, that doesn't necessarily mean it will perform just as well on new, real-world data. That's why evaluating how well an AI model generalizes to new data is important before you trust it.

How transparent is the AI model?

Some organizations are reluctant to use AI because they don't understand how it works. If you're in one of those organizations, be sure to ask about the transparency of the AI models you're considering. Can you get a simple explanation of how they work? Or do they use a "black box" approach that makes them inscrutable?

Who is responsible for the AI model?

Finally, ask about responsibility for the AI models you're considering. Who will be responsible for monitoring them and ensuring that they operate as intended? Who will be held accountable if something goes wrong? These are important questions to consider before you trust an AI solution.
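The generalization question above is the easiest of the five to put a number on: compare accuracy on the data the model was trained on with accuracy on data it has never seen. Here is a minimal sketch, assuming a deliberately overfit toy "model" that simply memorizes its training rows. The data and function names are hypothetical.

```python
def accuracy(predict, examples):
    """Fraction of (features, label) examples the model predicts correctly."""
    correct = sum(1 for features, label in examples if predict(features) == label)
    return correct / len(examples)


def fit_memorizer(train):
    """Build a toy model that memorizes training rows.

    For inputs it has never seen, it falls back to the most common training label.
    """
    table = {features: label for features, label in train}
    labels = [label for _, label in train]
    majority = max(set(labels), key=labels.count)
    return lambda features: table.get(features, majority)


# Made-up training rows and held-out rows the model never saw.
train = [((1, 0), 1), ((2, 1), 1), ((3, 0), 0), ((4, 1), 1)]
test = [((5, 0), 0), ((6, 1), 1), ((7, 0), 0), ((8, 1), 1)]

predict = fit_memorizer(train)
train_acc = accuracy(predict, train)
test_acc = accuracy(predict, test)
gap = train_acc - test_acc
print(train_acc, test_acc, gap)
```

A large gap between the two numbers is the warning sign. When a vendor quotes you an accuracy figure, it is worth asking which of these two numbers it is.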

Tooling to manage responsible AI

IBM recently announced IBM watsonx.governance, a new, one-stop solution that works with any organization's current data science platform. This solution includes everything needed to develop a consistent, transparent model management process, including capturing model development time, metadata, post-deployment model monitoring, and customized workflows.

Watch this video from IBM's CEO talking about Responsible AI for Business

I recommend checking out AI FactSheets 360, a project from IBM Research that fosters trust in AI by increasing transparency and enabling the governance of models. A FactSheet provides all the relevant information (facts) about creating and deploying AI models or services. Model Cards are also popular in the community as a great way to document and provide all the key information about a machine-learning model. For example, see the model card for the new Llama 3, currently one of the most popular large language models.

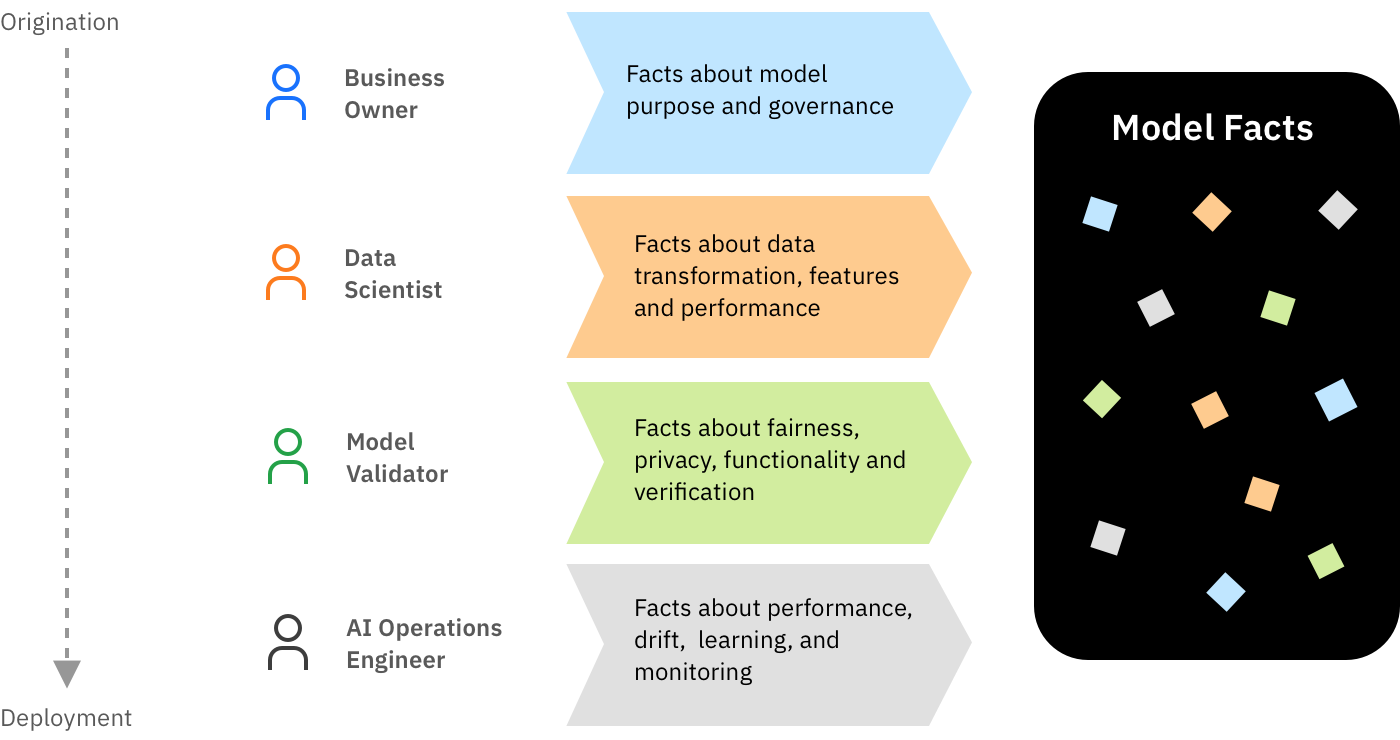
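To give a feel for what such a document captures, here is a minimal sketch of a fact sheet as a data structure. The field names are my own assumptions, loosely mirroring the five questions above. They are not the AI FactSheets 360 schema or the Llama 3 model card format, and the example values are made up.

```python
from dataclasses import dataclass, asdict


@dataclass
class ModelFactSheet:
    """Hypothetical fact sheet; fields are illustrative, not an official schema."""
    model_name: str
    developed_by: str = ""    # who built the model, and how
    training_data: str = ""   # what data it was trained on
    evaluation: str = ""      # how it performs on held-out data
    explanation: str = ""     # how its decisions can be explained
    owner: str = ""           # who monitors it and is accountable

    def missing_facts(self):
        """Return the names of fields that are still empty."""
        return [name for name, value in asdict(self).items() if not value]


sheet = ModelFactSheet(
    model_name="credit-scoring-v1",  # made-up example model
    developed_by="Internal data science team",
    training_data="Loan applications, 2019 to 2023",
)
print(sheet.missing_facts())
```

A check like `missing_facts()` could be used as a simple gate in a review process: the model does not ship until every question has an answer written down.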

The image below shows how AI Facts are captured across all the activities in the AI lifecycle for all the roles involved.

AI model facts being generated by different AI lifecycle roles (image from IBM AIFS360)

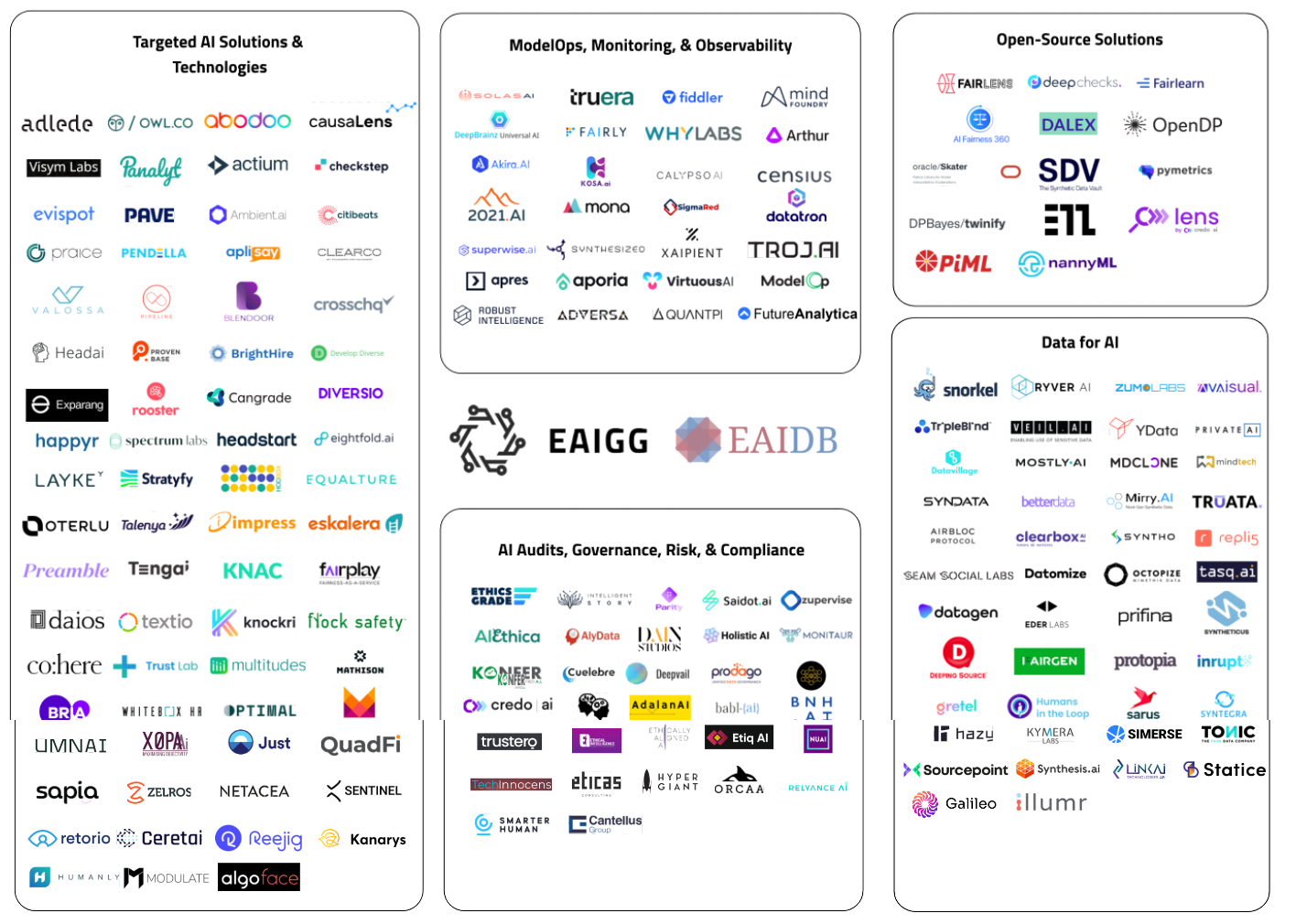

There are also some great startups in the space. For example, Credo.ai empowers organizations to create AI with the highest ethical standards. My friend Susannah Shattuck is doing a great job leading Product Management and raising the bar.

The Ethical AI Database project (EAIDB) is the only publicly available, vetted database of AI startups providing ethical services.

Ethical AI Startup Landscape provided by EAIDB as of Q2 2022

It's time to manage production AI at scale

AI has enormous potential, but it's important to ask some tough questions before implementing an AI solution in your organization. Before putting your trust in a model, consider how it was developed, what data was used to train it, how well it generalizes to new data, how transparent it is, and who is responsible for it. Considering these factors will help ensure you get the most out of your investment in artificial intelligence.

To build trust in AI, businesses must focus on three key areas: explainability, fairness, and security. By paying attention to these pillars of trustworthy AI, companies can help ensure that their algorithms behave as they should and are not biased against certain groups of people. In today's data-driven world, that's more important than ever.

Enjoy the weekend, folks!

Armand

